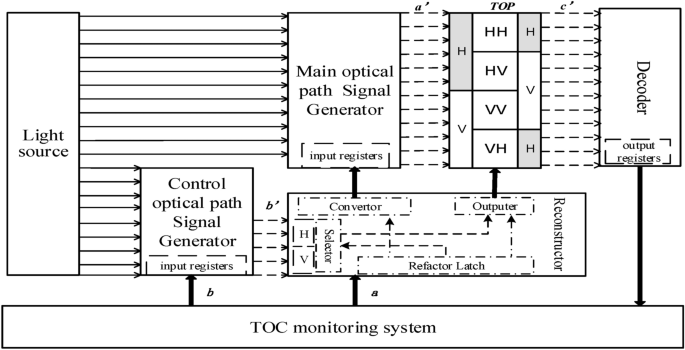

Experiments were conducted using a dual-center computing model that combines a standard electronic computer with a Ternary Optical Computer (TOC), specifically the TOC-SD16. The electronic computer ran 64-bit Windows 10 on an Intel Core i7 processor with 8 GB of RAM. In the TOC-SD16, three adjacent pixels in a row form one processor bit, a layout chosen to make the data easier to visualize. Each pixel can emit horizontally polarized light, vertically polarized light, or no light, possibly at different intensities. Each processor bit, however, produces exactly one of three output states: no light, vertically polarized light, or horizontally polarized light.

The experiments involved four basic computational tasks: addition, subtraction, logical AND, and logical OR. For addition and subtraction, 8-bit MSD (Modified Signed Digit) numbers were used, with 200,000 pairs of data for each operation. For logical AND and OR operations, 8-bit three-valued data was employed, with 100,000 pairs of data for each.
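As a brief illustration of the MSD representation used for the arithmetic tasks: each digit takes a value in {-1, 0, 1} and carries a binary weight, so the same number can have several encodings, and subtraction can be reduced to addition by negating every digit. The sketch below assumes digits are stored least-significant first; the function names are illustrative and are not taken from the TOC-SD16 software.

```python
def msd_value(digits):
    """Integer value of an MSD number; digits[i] in {-1, 0, 1} has weight 2**i."""
    if any(d not in (-1, 0, 1) for d in digits):
        raise ValueError("MSD digits must be -1, 0, or 1")
    return sum(d * 2**i for i, d in enumerate(digits))


def msd_negate(digits):
    """Negate an MSD number by flipping the sign of every digit."""
    return [-d for d in digits]


# Two different 8-digit encodings of the same value (the representation is redundant).
one_a = [1, 0, 0, 0, 0, 0, 0, 0]    # 1
one_b = [-1, 1, 0, 0, 0, 0, 0, 0]   # -1 + 2 = 1
```

Because negation is digit-wise, an operation `a - b` can be carried out as `a + msd_negate(b)` on the same kind of adder.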

The experimental process involved several key stages. First, an auxiliary software plug-in on the electronic computer generated a data file describing the task. A connection between the electronic computer and the TOC-SD16 was then established, and the file was transmitted to the TOC, where it was received and parsed. From the file's contents, the system determined the calculation rules and the data required. Processor bits within the TOC-SD16 were then allocated and configured to perform the specified calculations.

Each 8-digit addition or subtraction operator was allocated 44 processor bits, and each 8-digit logical AND or OR operator was allocated 8 processor bits. Because the addition and subtraction datasets were twice as large, each of these operations was assigned two operators, while AND and OR were assigned one each. Together these form a composite operator of 2 × 44 + 2 × 44 + 8 + 8 = 192 processor bits. This configuration was then used to reconstruct the optical processor for computation.
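The allocation can be checked with a few lines of arithmetic. The sketch below uses the bit counts and operator counts stated in the text; the ordering of operators within the composite operator and the function name are assumptions made only for illustration.

```python
# Processor bits per operator and operators per composite operator (from the text).
BITS_PER_OPERATOR = {"add": 44, "sub": 44, "and": 8, "or": 8}
OPERATORS_PER_COMPOSITE = {"add": 2, "sub": 2, "and": 1, "or": 1}


def composite_layout():
    """Assign each operator a contiguous bit range [start, end).

    The ordering (add, sub, and, or) is hypothetical; only the totals
    come from the described experiment.
    """
    layout = {}
    next_bit = 0
    for op, copies in OPERATORS_PER_COMPOSITE.items():
        for copy in range(copies):
            width = BITS_PER_OPERATOR[op]
            layout[(op, copy)] = (next_bit, next_bit + width)
            next_bit += width
    return layout, next_bit


layout, total_bits = composite_layout()
```

Here `total_bits` comes out to 192, matching the size of one basic module.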

Data from the prepared file were processed sequentially. The system generated data frames and sent them to the corresponding operators for computation, and the results were obtained as light beams. The data were organized into screens, each grouping the inputs that could be processed in parallel; for addition, for example, the inputs were pairs of MSD numbers. Each MSD addition required three steps, while each logical operation was completed in one step.
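To show why MSD addition can finish in a fixed three steps regardless of operand length, here is a minimal sketch of a textbook carry-free MSD addition scheme. The text does not list the exact transforms the TOC-SD16 applies, so this should be read as one standard way to achieve three-step addition, not as the system's implementation. Digits are assumed to be stored least-significant first, and all names are illustrative.

```python
def msd_value(digits):
    """Integer value of an MSD number; digits[i] in {-1, 0, 1} has weight 2**i."""
    return sum(d * 2**i for i, d in enumerate(digits))


def msd_add(x, y):
    """Add two MSD numbers in three digit-parallel steps (no carry chain)."""
    n = max(len(x), len(y))
    x = list(x) + [0] * (n - len(x))
    y = list(y) + [0] * (n - len(y))

    # Step 1: split each digit sum as x[i] + y[i] == 2*t + w, where the
    # transfer t takes the sign of the sum and moves one position left.
    t1, w1 = [0], []
    for a, b in zip(x, y):
        s = a + b
        t = (s > 0) - (s < 0)
        t1.append(t)
        w1.append(s - 2 * t)
    w1.append(0)  # pad so t1 and w1 have equal length

    # Step 2: split again, but emit a transfer only when the sum is +2 or -2.
    t2, w2 = [0], []
    for a, b in zip(t1, w1):
        s = a + b
        t = (s == 2) - (s == -2)
        t2.append(t)
        w2.append(s - 2 * t)
    w2.append(0)

    # Step 3: the remaining digit sums never leave {-1, 0, 1}, so a plain
    # digit-wise addition finishes the job.
    result = [a + b for a, b in zip(t2, w2)]
    assert all(d in (-1, 0, 1) for d in result)
    return result
```

Every loop iteration depends only on digits at the same position, which is what lets all positions of a step be evaluated simultaneously. For any inputs `x` and `y`, `msd_value(msd_add(x, y))` equals `msd_value(x) + msd_value(y)`, and the result is two digits longer than the longer operand.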

Experimental results from the first three screens were captured and analyzed, demonstrating close agreement with theoretical predictions for transformations and operations performed by the system.

After calculations were complete, a result file was generated and returned to the electronic computer. The optical processor’s control software decoded the output light beams into binary results, which were then collected into the result file.

A single basic module of the TOC-SD16 contains 192 processor bits, and up to 64 modules can be installed, which would provide up to 64 identical composite operators working in parallel. For the experimental tasks, this parallelism significantly reduced the number of operation cycles compared with traditional methods. The dual-center model completed the tasks in a fraction of the clock cycles, and with a much smaller share of computing resources, than a traditional computer performing the same calculations.
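A rough count of screens illustrates the effect of adding modules. The sketch below uses the dataset sizes and per-module operator counts given in the text, and assumes that every operator slot is filled on every screen and that all installed modules run in lockstep. It counts screens only; it does not model the steps within a screen (such as the three steps of an MSD addition) or any file-transfer overhead.

```python
import math

# Operand pairs per task and operators per composite operator (from the text).
PAIRS = {"add": 200_000, "sub": 200_000, "and": 100_000, "or": 100_000}
OPERATORS_PER_MODULE = {"add": 2, "sub": 2, "and": 1, "or": 1}


def screens_needed(modules):
    """Screens required to process all four datasets with `modules` modules.

    Assumes ideal packing: each screen feeds one operand pair to every
    operator in every module. The slowest task determines the total.
    """
    if modules < 1:
        raise ValueError("at least one module is required")
    return max(
        math.ceil(PAIRS[op] / (OPERATORS_PER_MODULE[op] * modules))
        for op in PAIRS
    )
```

Under these assumptions, `screens_needed(1)` is 100,000 and `screens_needed(64)` is 1,563. Doubling the adders and subtractors per module is what lets all four datasets finish on the same screen.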

The experimental results confirmed the correctness of the dual-center model. This approach leverages the strengths of both electronic and optical computing, allowing the electronic computer to handle control and resource management while the optical computer carries out the computationally intensive tasks. This also allows users to maintain familiar programming practices on the electronic computer.

The file-based method works well for data-intensive, repetitive calculations that need no intermediate iterations. It is less suitable for applications that require multiple iterations, or whose workload exceeds what the optical computer can compute after a single reconstruction. A suggested improvement is to build a large memory directly into the optical computer to store intermediate results, which could eliminate the overhead of file generation and parsing and further improve the system's performance.
